Stable diffusion fine-tuning demos

Training stable-diffusion to generate new styles

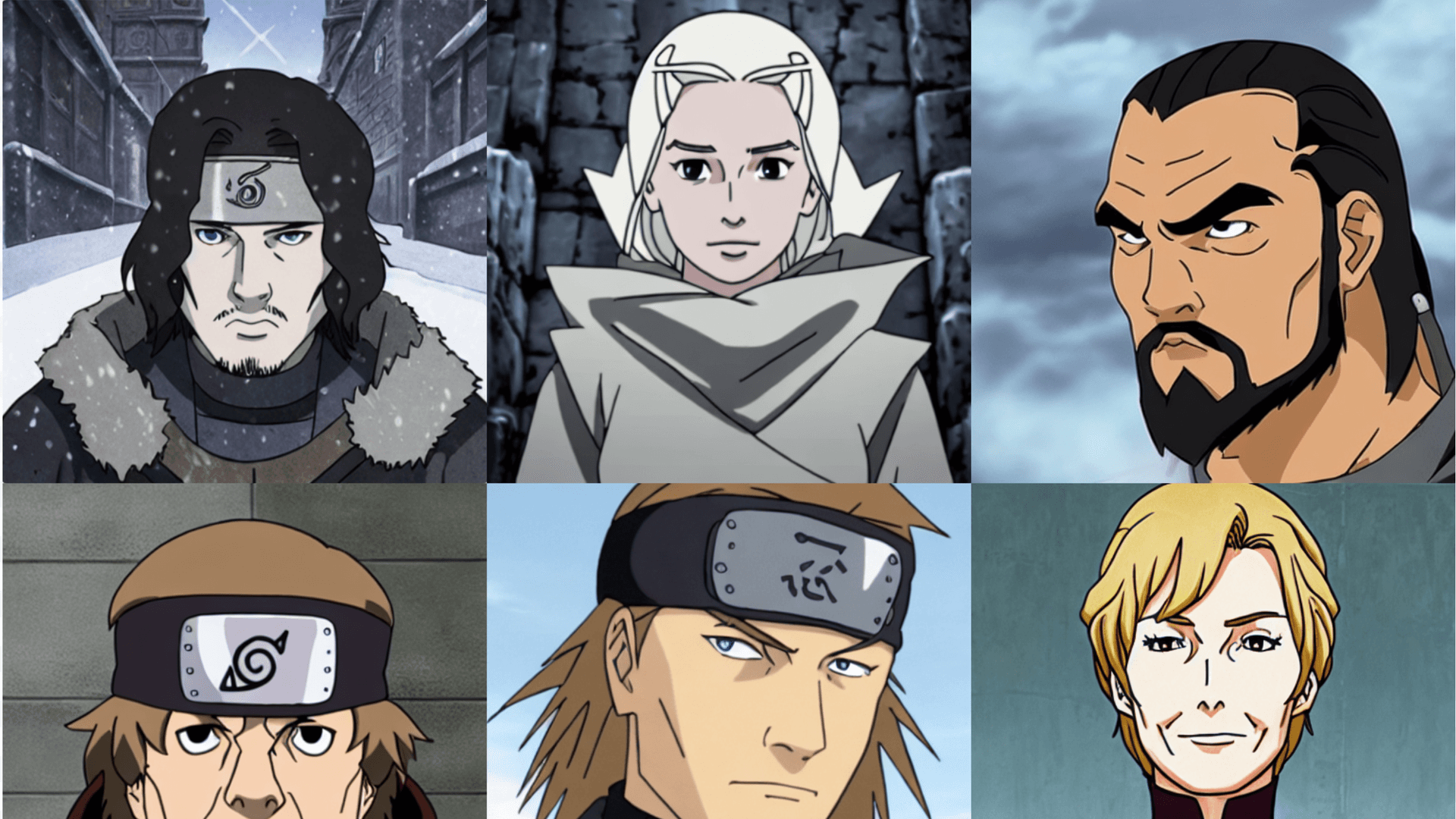

We present a couple of examples of finetuning the text-to-image Stable Diffusion model to learn new styles (e.g. “Naruto”, “Avatar”). Note that a similar methodology can be applied to teach the base model a new object or identity.

Stable diffusion finetuning

Training data

The first step is to collect hundreds of data samples for the style to learn. The original images were obtained from narutopedia.com and captioned with the pre-trained BLIP captioning model.
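A minimal sketch of the captioning step, assuming the Hugging Face `transformers` BLIP classes and the `Salesforce/blip-image-captioning-base` checkpoint (which BLIP checkpoint was actually used is not stated). `load_blip` and `caption_image` are hypothetical helper names. The processor and model are passed in as arguments so the captioning logic does not itself depend on downloading weights.

```python
from typing import Any


def load_blip(model_id: str = "Salesforce/blip-image-captioning-base"):
    """Load a pre-trained BLIP captioning model (assumed checkpoint; downloads on first use)."""
    # Imported lazily so the helper below can be used without transformers installed.
    from transformers import BlipForConditionalGeneration, BlipProcessor

    processor = BlipProcessor.from_pretrained(model_id)
    model = BlipForConditionalGeneration.from_pretrained(model_id)
    return processor, model


def caption_image(image: Any, processor: Any, model: Any, max_new_tokens: int = 30) -> str:
    """Generate a text caption for one PIL image with a BLIP processor/model pair."""
    # Preprocess the image into pixel tensors.
    inputs = processor(images=image, return_tensors="pt")
    # Autoregressively generate caption token ids.
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Turn the first (and only) sequence back into text.
    return processor.decode(output_ids[0], skip_special_tokens=True)
```

In practice one would call `processor, model = load_blip()` once, then `caption_image(Image.open(path).convert("RGB"), processor, model)` for each scraped image.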

Each row of the dataset contains `image` and `text` keys: `image` is a variable-size PIL JPEG image, and `text` is the accompanying caption.
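One way to store such a dataset locally is sketched below, assuming the Hugging Face `datasets` "imagefolder" convention: a `metadata.jsonl` file next to the images, where each line carries a `file_name` and, here, a `text` field. `build_metadata_lines` and `write_metadata` are hypothetical helper names.

```python
import json
from pathlib import Path
from typing import Dict, List


def build_metadata_lines(captions: Dict[str, str]) -> List[str]:
    """Turn {image file name: caption} into JSON Lines records for imagefolder."""
    lines = []
    for file_name, text in sorted(captions.items()):
        # `file_name` links the record to an image; other keys become extra columns.
        record = {"file_name": file_name, "text": text.strip()}
        lines.append(json.dumps(record, ensure_ascii=False))
    return lines


def write_metadata(data_dir: str, captions: Dict[str, str]) -> Path:
    """Write metadata.jsonl into the folder that holds the images."""
    out_path = Path(data_dir) / "metadata.jsonl"
    out_path.write_text("\n".join(build_metadata_lines(captions)) + "\n", encoding="utf-8")
    return out_path
```

Loading that folder with `load_dataset("imagefolder", data_dir=...)` should then yield rows with an `image` column decoded to PIL and a `text` column holding the caption.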

Given the recent progress of multimodal LLMs, I would probably use one of them instead of BLIP to caption raw images when building a labelled dataset.

The Naruto dataset is available on Hugging Face for reference.

Stable diffusion finetuning with DreamBooth

The second method uses DreamBooth, a finetuning technique for text-to-image diffusion models developed by researchers at Google, applied here to Stable Diffusion.
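DreamBooth's key ingredient is a class-specific prior-preservation loss. A minimal NumPy sketch follows, assuming each batch concatenates instance images (the new style or subject) with an equal number of "prior" images generated by the frozen base model for the generic class. This mirrors how the Hugging Face diffusers `train_dreambooth.py` example splits its batch. `dreambooth_loss` is a hypothetical name, and NumPy stands in for PyTorch.

```python
import numpy as np


def dreambooth_loss(model_pred: np.ndarray, target: np.ndarray, prior_loss_weight: float = 1.0) -> float:
    """Instance MSE plus weighted prior-preservation MSE.

    Assumes the batch axis is [instance examples; class-prior examples] with equal halves.
    """
    # Split predictions and targets into the instance half and the prior half.
    pred_inst, pred_prior = np.split(model_pred, 2, axis=0)
    tgt_inst, tgt_prior = np.split(target, 2, axis=0)
    # Standard denoising MSE on the new style/subject images.
    instance_loss = np.mean((pred_inst - tgt_inst) ** 2)
    # Same MSE on base-model samples of the generic class, which discourages
    # the finetuned model from forgetting what that class looks like.
    prior_loss = np.mean((pred_prior - tgt_prior) ** 2)
    return float(instance_loss + prior_loss_weight * prior_loss)
```

Setting `prior_loss_weight` to 0 reduces this to plain finetuning on the instance images. Larger values trade fidelity to the new style for retention of the base model's notion of the class.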